I remember the days of trying to write my own songs while playing in a band. Believe me, it's not easy if you don't have the talent, and I don't. So I basically ended up with some useless words on paper. Many years later, I started a journey into Deep Learning. Now I can write lyrics. What's more, I write fast, really fast: in just a moment. Here's one that I wrote in 1 second. What do you think?

Not too bad, right? Honestly, I didn't write this, but I created the program that wrote it, which is something. I used Recurrent Neural Networks (RNNs) to do this. If you know what Deep Learning and Machine Learning are, you've probably heard of them, too. I simply created a deep learning model with some LSTM cells, fed it some data, waited for a long time, and enjoyed the magic. To put it in a non-technical way, I taught my computer how to write lyrics by showing it thousands of already written lyrics. But it learned without studying English grammar the way we all did, which is why it sometimes made rookie mistakes in its writing. I'll show some lyrics from the model's early learning phase later in this post to explain this.

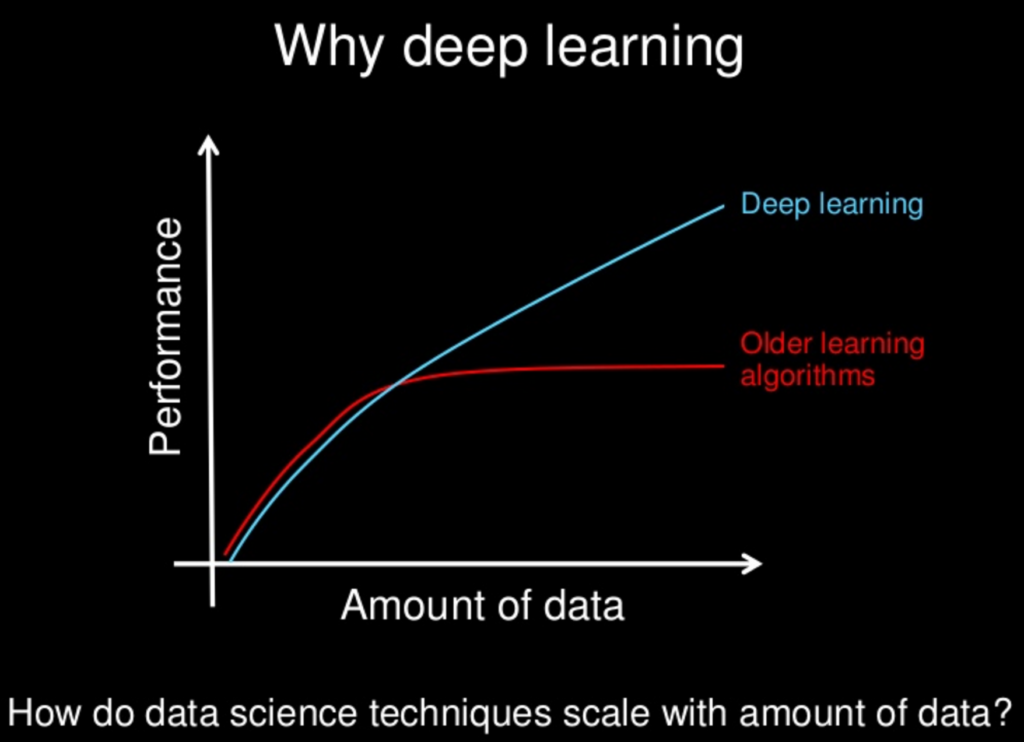

This is why Deep Learning is beautiful - Source

The things I learned in the Deep Learning Nanodegree at Udacity helped me a lot in the model training phase. I also combined other tools throughout the project, such as TensorFlow, GPU-accelerated computing, NumPy, AWS, Flask, and Bootstrap.

Data the Fetching

Data is the most important element in almost all machine learning applications. You can create your own awesome algorithm if you're smart enough, but you can't create data. You have to get it from somewhere: fetch it, or collect it yourself. I believe this logic is one of the reasons why Google open-sourced TensorFlow, one of its most important machine learning tools.

I used lyrics from some of the greatest metal bands for my training set. In addition to the Big Four (Metallica, Megadeth, Slayer, and Anthrax), my training set includes lyrics from Iron Maiden, Judas Priest, Pantera, Testament, and Manowar. This mix may cause British-specific and American-specific terms to show up in the same lyric. And yes, I'm a huge metal fan.

The Big Four, June 2010 – Source

Of course, I spent a lot of time collecting, cleaning, and pre-processing the data, but there's no need to go into those details for now.

...And Parameters for Fitting

Another important aspect of machine learning applications is choosing the right parameters. Even though there are some rules of thumb and intuitive selection methods for hyperparameter optimization, there is no one-size-fits-all solution.

The question of whether a computer can think is no more interesting than the question of whether a submarine can swim. ― Edsger W. Dijkstra

Computers are getting better at learning and coming up with great predictions. However, a human still needs to supply the right parameters for some problems, and humans make mistakes. I certainly did while choosing parameters. But I got the right hyperparameters in the end. Here they are, if you're interested.

batch_size = 100 # batch size for each training pass

num_steps = 100 # number of sequence steps to keep in the input

lstm_size = 512 # node size in layers

num_layers = 2 # number of layers in network

learning_rate = 0.001 # step size in learning

epoch = 40 # how many times to reiterate over the entire training set

keep_prob_train = 0.7 # ratio of nodes to keep in each layer pass

top_n = 4 # random choice list size for next char
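
To make batch_size and num_steps a bit more concrete, here is a minimal sketch of how a character-level text could be encoded and cut into training batches. It is an illustration under assumptions, not the project's actual code: the names `encode_text` and `get_batches`, the sample line, and the tiny demo values are all hypothetical, and it uses plain Python lists where a real pipeline would more likely use NumPy arrays.

```python
def encode_text(text):
    """Map each distinct character to an integer id (hypothetical helper)."""
    vocab = sorted(set(text))
    char_to_int = {ch: i for i, ch in enumerate(vocab)}
    return [char_to_int[ch] for ch in text], vocab


def get_batches(encoded, batch_size, num_steps):
    """Yield (inputs, targets) pairs.

    Each of inputs and targets is a list of batch_size rows, and each row
    holds num_steps character ids. Targets are the inputs shifted one
    character ahead, so the model learns to predict the next character.
    """
    chars_per_batch = batch_size * num_steps
    # Reserve one extra character so the last input always has a target.
    n_batches = (len(encoded) - 1) // chars_per_batch
    row_len = n_batches * num_steps  # characters assigned to each row

    for b in range(n_batches):
        start = b * num_steps
        inputs, targets = [], []
        for r in range(batch_size):
            lo = r * row_len + start
            inputs.append(encoded[lo:lo + num_steps])
            targets.append(encoded[lo + 1:lo + num_steps + 1])
        yield inputs, targets


# Tiny demo with made-up text and small values (the post uses 100 and 100).
sample_text = "we ride into the night, we ride into the fire"
encoded, vocab = encode_text(sample_text)
for inputs, targets in get_batches(encoded, batch_size=2, num_steps=5):
    print(inputs[0], "->", targets[0])
```

With the values listed above, each batch would hold 100 rows of 100 characters, so one training pass would consume 10,000 characters of lyrics.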

I think the coolest of these hyperparameters is top_n. It may not strictly count as a hyperparameter, because I use it in the lyric generation part, not in training. Anyway, let's say everything is done and the model starts generating characters. The model keeps only the 4 most likely next characters and picks one of them randomly. This adds variety to the output lyrics. If top_n is too small, the model can generate only a few distinct lyrics. If it's too large, the model may pick characters that don't belong there semantically.

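To explain this with an example in words, let's say the current state is "I'm a good ..." and these are the possible next words with their scores (illustrative values, not normalized, so they don't sum to 1 as true probabilities would):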

| Order | Next Word | Probability |
|---|---|---|
| 1 | person | 0.88 |
| 2 | guy | 0.78 |
| 3 | engineer | 0.65 |
| 4 | horse | 0.28 |
| 5 | nevertheless | 0.07 |
| ... | ... | ... |

Assuming top_n = 4, the model keeps person, guy, engineer, and horse, then randomly picks one of them as the next word. It may still choose horse, which isn't grammatically wrong and may even be semantically correct depending on the context. However, it should never choose nevertheless, and a small top_n prevents this. A large top_n, on the other hand, gives the model more choices for the next word. It's a trade-off, as with other hyperparameters.
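
The selection step described above can be sketched in a few lines of Python. This is a minimal sketch, not the code behind the project: `pick_top_n` is a hypothetical name, the scores are the illustrative ones from the table, and the example works on words for readability even though the model generates characters. It also assumes the random pick is weighted by each candidate's score. If the pick were uniform among the top candidates instead, the `weights` argument would simply be dropped.

```python
import random


def pick_top_n(scores, top_n, rng=random):
    """Keep the top_n highest-scoring candidates and sample one of them.

    scores: dict mapping each candidate to its score.
    rng:    the random module or a random.Random instance (for reproducibility).
    """
    # Sort candidates from highest to lowest score and keep the first top_n.
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_n]
    candidates = [candidate for candidate, _ in ranked]
    weights = [score for _, score in ranked]
    # random.choices treats weights as relative, so no renormalization is needed.
    return rng.choices(candidates, weights=weights, k=1)[0]


# Illustrative scores for the state "I'm a good ..." (taken from the table).
next_word_scores = {
    "person": 0.88,
    "guy": 0.78,
    "engineer": 0.65,
    "horse": 0.28,
    "nevertheless": 0.07,
}

print(pick_top_n(next_word_scores, top_n=4))
```

With top_n = 4 this can print person, guy, engineer, or horse, but never nevertheless, because that candidate is cut before sampling. With top_n = 1 the choice becomes deterministic and always returns the highest-scoring candidate.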

Train 'Em All

This part took a long time. I sometimes spent 20 hours training a model. Then I saw the light of the GPU's power. TensorFlow supports GPUs as well, if you have a supported device. Luckily, I do; otherwise this post would have been published next year. Setting up communication between the driver, the graphics card, and TensorFlow is not an easy process, though. I may write an entire post just about this. Once I got everything running, training was about 20 times faster.

But you know, nobody starts constructing grammatically perfect sentences when they're 2 years old, right? Why would we expect the same from a model?

This is a lyric from the model after 200 iterations,

I know it looks like it was written by a toddler. How about this one, after 1000 iterations:

Much better. At least there are a few meaningful words. Look at this one, after 4000 iterations:

Now all words have meanings, but sentences need to be improved semantically.

In the end, I was able to generate reasonable lyrics after about 10,000 iterations. I still see some poor wording and grammatical errors, and I'm still working on it. Who knows, I may come up with some good lyrics that motivate me to rebuild the band in the future...

But the real point is this: if even I was able to create okay metal lyrics in a few days, I really can't imagine what AI will bring us in a few decades. Look it up: people have already started to generate music with it.

The Fun Part

Here's the fun part. I also created a web app for this project, Lyrics-Gen, to give everyone a chance to create their own metal lyrics in a moment. Feel free to play around.

Or, you can see the incomplete repository here for the model details.

Please reach out with any questions, comments, or suggestions, or just to say Hi!
